Untying The Gordian Knot -- Initial Barcelona Benchmarks

I can't recall the last time there was so much controversy surrounding the launch of a new product, nor can I recall the last time that testing was so poor and analysis so far off the mark. Barcelona launches amid a flurry of conflicting praise and criticism. The problem right now is that many ascribe the positive portrayals of Barcelona to simple acceptance of AMD slide shows and press releases, while those who are strongly critical seem to have only the poor quality testing for backing.

I can't really criticize people for misunderstanding the benchmarks since these have included similar poor analysis by the testers themselves. It is unfortunate indeed that there is not one single review that has enough testing to be informative. It is equally unfortunate that proper analysis requires some technical understanding of Barcelona (K10) which has been lacking among typical review sites such as Tech Report and Anandtech.

K8 is able to handle two 64 bit SSE operations per clock. It takes the capacity of two of the three decoders to decode the two instructions each clock. The L1 Data cache bus is able to handle two 64 bit loads per clock. Finally, K8 has two math capable SSE execution units, FMUL and FADD, and is able to handle one 64 bit SSE operation on each per clock.

Core 2 Duo does better, but it is important to understand how. Simply doubling the number of 64 bit operations from two to four would be impossible since this would require four simple decoders and four SSE execution units. C2D only has three available simple decoders and two execution units. C2D gets around these limitations by having execution units that are 128 bits wide, which allows two 64 bit operations to be run in pairs on a single execution unit. Consequently, by using 128 bit SSE instructions, C2D only needs two decoders plus two execution units to double what K8 can do. Naturally, though, C2D's L1 Data bus also has to be twice as wide to handle the doubled volume of SSE data. This 2:1 speed advantage for C2D over K8 is not really debatable as it has been demonstrated time and time again on HPC Linpack tests. Any sampling of the Linpack peak scores will show that C2D is twice as fast.

Today, though, Barcelona's SSE has been expanded in a very similar way to Core 2 Duo's. Whereas two of K8's decoders could process two 64 bit instructions per clock, Barcelona's decoders can process 128 bit SSE instructions at the same rate. The execution units have been widened to 128 bits and are capable of handling pairs of 64 bit operations at each clock. Likewise, the L1 Data cache bus has been widened to allow two 128 bit loads per clock.

The reason it is necessary to understand the architecture is to understand how the code needs to be changed in order to see the increase in speed. Suppose you have an application that uses 64 bit SSE operations for its calculations. This code will not run any faster on K10 or C2D. Since both K10 and C2D have only two SSE execution units, the code would be bottlenecked at the same speed as K8. The only way to make these processors run at full speed is to replace the original 64 bit operations with 128 bit operations allowing the 64 bit operations to be executed in pairs. Without this change, there is no change in SSE speed. It becomes very important therefore to fully understand whether or not a given benchmark has been compiled in a way that will make this necessary change in the code.
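The argument above can be put into a toy model. The sketch below is only an illustration under the article's stated assumptions (two math capable SSE units per core, 64 bits wide on K8 and 128 bits wide on C2D and K10, one instruction per unit per clock). The `Core` class and `results_per_clock` function are hypothetical names, and the model ignores decode, load and latency limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Core:
    """Hypothetical description of a core's SSE math resources."""
    name: str
    sse_units: int        # math capable SSE execution units (FADD, FMUL)
    unit_width_bits: int  # width of each execution unit


def results_per_clock(core: Core, instr_width_bits: int) -> int:
    """Peak 64 bit results per clock, assuming one instruction per unit per clock."""
    # An instruction can only fill as many 64 bit lanes as the unit is wide.
    # Simplification: a 128 bit instruction on a 64 bit unit is counted
    # as one 64 bit result per clock on that unit.
    usable_bits = min(instr_width_bits, core.unit_width_bits)
    return core.sse_units * (usable_bits // 64)


# Unit counts and widths as described in the article.
K8 = Core("K8", sse_units=2, unit_width_bits=64)
C2D = Core("C2D", sse_units=2, unit_width_bits=128)
K10 = Core("K10", sse_units=2, unit_width_bits=128)

if __name__ == "__main__":
    for core in (K8, C2D, K10):
        for width in (64, 128):
            print(f"{core.name}: {width} bit instructions -> "
                  f"{results_per_clock(core, width)} results/clock")
```

In this model 64 bit code yields 2 results per clock on all three cores, and only 128 bit code reaches 4 results per clock on C2D and K10.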

Let's dive right into the first fallacy dogging K10: the claim that K10's SSE is no faster than what is seen on K8. This naive idea is easily proven false even with code that is optimized for C2D, Anandtech's Linpack test:

It is clear that this code is using 128 bit SSE for Xeon because it is more than twice the speed of K8. It also seems clear that Opteron 2350 (Barcelona) is using 128 bit SSE as well because it is also more than twice as fast as the pair of Opteron 2224's (K8). However, we can see that both paired Xeon 5160 (dual core C2D) and Xeon 5345 (quad core C2D) are significantly faster than Barcelona. This is because the code order is arranged to match the latency of instructions in C2D. However, when we look for a better test, we find Techware Labs' Linpack test:

Barcelona 2347 (1.9GHz) 37.5 Gflop/s

Intel Xeon 5150 (2.6GHz) 35.3 Gflop/s

The problem with this data is that the article does not make clear exactly what the test configuration is. We can infer that Barcelona is dual since the motherboard is dual. We could also infer that 5150 is dual since 5160 was dual. But this is not stated explicitly. So, we either have a comparison where the author is correct and Barcelona is faster or we have Barcelona using twice as many cores to achieve a similar ratio to what we saw at Anandtech.
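The two readings can be compared with a quick per core, per clock normalization. This is only a sketch: the Gflop/s figures and clock speeds are the ones quoted above, but the core counts are guesses, since the review does not state them, and `per_core_per_ghz` is a made-up helper.

```python
def per_core_per_ghz(gflops: float, cores: int, ghz: float) -> float:
    """Normalize a Linpack score by core count and clock speed."""
    return gflops / (cores * ghz)


# Scores and clocks as quoted from the Techware Labs review.
BARCELONA_GFLOPS, BARCELONA_GHZ = 37.5, 1.9
XEON_GFLOPS, XEON_GHZ = 35.3, 2.6

# ASSUMPTION: the Xeon 5150 system is dual socket, dual core (4 cores).
xeon = per_core_per_ghz(XEON_GFLOPS, cores=4, ghz=XEON_GHZ)

# Scenario A (assumed): Barcelona runs the same number of cores (4).
ratio_equal_cores = per_core_per_ghz(BARCELONA_GFLOPS, 4, BARCELONA_GHZ) / xeon

# Scenario B (assumed): Barcelona is dual socket, quad core (8 cores).
ratio_double_cores = per_core_per_ghz(BARCELONA_GFLOPS, 8, BARCELONA_GHZ) / xeon

if __name__ == "__main__":
    print(f"Equal cores:  Barcelona/Xeon = {ratio_equal_cores:.2f}")
    print(f"Double cores: Barcelona/Xeon = {ratio_double_cores:.2f}")
```

A ratio above 1 means Barcelona is faster per core per clock. Under scenario A the ratio is above 1 and under scenario B it is below 1, which is why the missing configuration matters.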

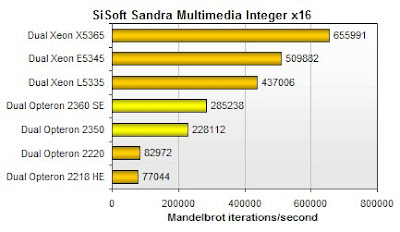

This Sandra benchmark shows no increase in speed for C2D so it is clear that it is not using 128 bit instructions. Since the SSE code is not optimized there is no reason to assume that the Integer code is either. Therefore, we have to discard both the Whetstone and Dhrystone scores.

Tech Report Sandra Multimedia

Normalizing the scores for both core count and clock speed shows tight clustering in three groups. Using a ratio based on K8 Opteron gives:

K8 Opteron - 1.0

K10 Opteron - 1.6

C2D Xeon - 1.9

These ratios appear normal since C2D is nearly twice K8's speed. Also, K10's ratio is about what we would expect for code that is not optimized for K10. Properly optimized code for K10 should be a bit faster. This is similar to what we saw with Linpack.

Normalizing the Integer scores in the same way again shows tight clustering in three groups. Using a ratio based on K8 Opteron gives:

K8 Opteron - 1.0

K10 Opteron - 1.9

C2D Xeon - 3.7

Barcelona's score compared to Opteron is what we would expect at roughly twice the speed. The oddball score is C2D since it is roughly four times Opteron's speed. This type of score might lead to the erroneous conclusion that C2D is nearly four times faster than K8 and still roughly twice the speed of Barcelona. Such a notion however doesn't stand up to scrutiny. A thorough comparison of K10 and K8 instructions shows that the only instructions that run twice as fast are 128 bit SIMD instructions. There is no doubt therefore that K10 is indeed using 128 bit SIMD. It is not of any particular importance whether K8 is using 64 or 128 bit Integer instructions since these run at the same speed. This readily explains K10's speed.

However, C2D's is still puzzling since its throughput for 128 bit Integer SIMD is roughly the same as K10's. The only clue to this puzzle is the poor code optimization seen in the previous SSE FP operations. Given that Sandra does exhibit poor optimization for AMD processors (and sometimes for Intel as well), we'll have to conclude that the slow speed of the code on AMD processors is simply due to poor code optimization. Someone might be tempted to attribute this to actual differences in architecture such as C2D's greater stores capacity. However, this is clearly not the case as K8 has the same proportions and is still only one quarter the speed. In other words, if this were the case then K8 would be half the speed and then K10 would be bottlenecked at nearly the same speed. Given the K8/K10/C2D ratios the most likely culprit is code that is heavily optimized for C2D but which runs poorly on AMD processors.

We do have a second benchmark from the Anandtech review that is interesting.

"The LINPACK and zVisuel benchmarks make it clear that Intel and AMD have about the same raw FP processing power (clock for clock), but that the Barcelona core has the upper hand when the application has to access the memory a lot."

Unfortunately, because these benchmarks did favor Intel we can't say for certain what Barcelona's top speed truly is. So, the initial benchmarks for Barcelona are not really indicative of its performance. Over time we should see reviews and benchmarks that show K10's true potential. If from no other source, HPC Linpack scores on supercomputers using K10 will eventually show K10's true SSE FP ability. Since SSE Integer operations have the same peak bandwidth with 128 bit width, this would indicate maximum Integer speed as well. With K10's base architectural changes confirmed it should just be a matter of time before the reviews catch up. This should also mean that for AMD it is primarily a matter of increasing clock speed above 2.5 GHz to be fully competitive with Intel.

86 comments:

I believe my analysis of the current reviews is accurate however this being one week after launch we may see some additional information being published. If something substantial turns up that contradicts the initial reviews I can always update the main article.

Excuse me but what exactly are those 64bit SSE instructions you are talking about? I know that you can do 4x 32bit FP or 2x64bit FP calculations but they should execute instructions at same speed, just that with 64bit you only have two numbers instead of four.

ho ho

128 bit and 64 bit SSE instructions are listed in Appendix C of the Intel® 64 and IA-32 Architectures Optimization Reference Manual.

128 bit SIMD are in table C-3 and C-3a.

64 bit Integer SIMD is table C-6 and C-6a.

64 bit MMX is table C-7 and C-8.

AMD lists these instructions also in Appendix C of the Software Optimization Guide for AMD Family 10h Processors

128 bit media instructions are C.4.

64 bit media instructions are C.5.

From what I know Intel doubled the throughput of all XMM registers, that means all SSE and no MMX as MMX uses a different set of registers.

The reason I asked was that you said "Suppose you have an application that uses 64 bit SSE operations for its calculations. This code will not run any faster on K10 or C2D. Since both K10 and C2D have only two SSE execution units, the code would be bottlenecked at the same speed as K8"

This is wrong. If your code is running in XMM registers then it will be twice as fast on Core2 than it was on K8, K10 should be as fast as Core2. It won't matter if you use 8/16/32/64bit integers or 32/64bit floating point numbers, speedup will always be the same.

If you made a typo and meant that programmers should switch from MMX to SSE then yes, you are correct that those instructions will be slower than SSE on both, Core2 and K10.

Here is the Intel Manual, May 2007:

Chapter 5

The collection of 64-bit and 128-bit SIMD integer instructions supported by MMX technology, SSE, SSE2, SSE3 and SSSE3 are referred to as SIMD integer instructions.

Code sequences in this chapter demonstrates the use of 64-bit SIMD integer instructions and 128-bit SIMD integer instructions.

Execution of 128-bit SIMD integer instructions in Intel Core microarchitecture are substantially more efficient than equivalent implementations on previous microarchitectures. Conversion from 64-bit SIMD integer code to 128-bit SIMD integer code is highly recommended.

Here is the AMD K10 Manual, September 2007:

Chapter 9

The 64-bit and 128-bit SIMD instructions—SSE, SSE2, SSE3, SSE4a instructions—should be used to encode floating-point and packed integer operations.

AMD Family 10h processors with 128-bit multipliers and adders achieve better throughput using SSE, SSE2, SSE3, and SSE4a instructions.

ho ho

Your understanding is incorrect. K8 is capable of decoding and executing two 64 bit instructions per clock; C2D can't do this any faster.

I've now given you direct references from both manuals showing conversion to 128 bit instructions is recommended to increase speed. I think you should be up to speed now.

aguia

Yes, additional benchmarks will be good. We'll have to see if more reviews are done.

savantu & axel

I know you did not get your configuration from the article because it isn't listed.

Since the author states that Barcelona is faster I assumed that the number of cores was the same. However, considering that the authors at both Tech Report and Anandtech show a lack of technical understanding there is no reason to assume that the author there is any more knowledgeable.

I edited the main article to show that the configuration and therefore the interpretation is not clear. I also added the zVisuel graph.

Scientia said in the blog post

"It is clear that this code is using 128 bit SSE for Xeon because it is more than twice the speed of K8. It also seems clear that Opteron 2350 (Barcelona) is using 128 bit SSE as well because it is also more than twice as fast as the pair of Opteron 2224's (K8)."

Looking at the graph shown I read it as not being twice as fast, seems to be less than that.

I agree with your overall analysis that the full picture has not emerged yet, indeed the reviews have been very scrappy. The support hardware seems full of bugs with unstable early BIOS (read the Xtremesystems AMD thread on this for more details). Also the testers had very little time. AMD has to take some of the blame for this though.

Yes, AMD will definitely have to get above 2.5GHz. Intel will be demo'ing Penryn at IDF from today onwards and from what I have heard it probably has 400-500MHz on top of 65nm Core2 in headroom. I would say Intel could do 3.33 or 3.2 versions so AMD will have to get to around 2.7-2.9.

Scientia said...

Since both K10 and C2D have only two SSE execution units, the code would be bottlenecked at the same speed as K8.

Conroe has 3 128bit wide SSE units thus your entire article draws conclusions from invalid information. Sorry.

Source #1

Source #2

scientia

"Your understanding is incorrect. K8 is capable of decoding and executing two 64 bit instructions per clock; C2D can't do this any faster."

Are we talking about floating point or integer math? Intel manual only talks about 64 vs 128bit integer math. LINPACK is a floating point program but you said: "It is clear that this code [LINPACK] is using 128 bit SSE for Xeon because it is more than twice the speed of K8"

So, is there any kind of difference between 64/128bit floating point SIMD on Core2? If so then please tell me where could I read about it as from the first look I couldn't find it from their manual.

"zVisuel gives Intel an unfair advantage since it is, "a benchmark which is very SSE intensive and which is optimized for Intel CPUs." Barcelona nevertheless takes the lead"

As you see Barcelona has much bigger lead in 2P. As a platform Barcelona does have an edge over Core2 thanks to having more bandwidth and lower latency (no FBDIMM). Though when comparing pure CPU performance things are not as clear.

Another interesting thing is that AMD will release triple core CPUs. That would only make sense if they can salvage enough broken quadcores that would work with one core disabled. Kind of makes me wonder what their quadcore yield is if they have enough crippled ones to sell as a whole new CPU line.

Well, anyone can sign up to download AMD's ACML (AMD Core Math Libraries) package and run an AMD-optimized Linpack test.

http://developer.amd.com/acml.jsp

I've been thinking of trying it on my Opteron 185 just for grins. Would be interesting to see how the AMD library performs on a variety of systems...

Axel

Do you have any points beyond those that have already been identified and corrected? Bring your comments up to date.

"So why did you delete our comments and thereby make it impossible for other readers to follow this illuminating discussion?"

I'll be happy to leave anything that adds to the discussion.

"I'm once again resorting to the rather tiresome tactic of copying this post onto Roborat's"

Feel free.

Nehalem at IDF:

http://www.fudzilla.com/index.php?option=com_content&task=view&id=3088&Itemid=35

Azmount

"Conroe has 3 128bit wide SSE units"

I seem to be spending a lot of time in this thread educating first ho ho and now you about SSE. Why not? Try getting your information directly from Intel and AMD instead of from an article. Intel's architecture has the limited function SSE Branch unit while AMD's architecture contains the very similar FSTORE unit. Neither unit is capable of performing the common SSE instructions which is why I didn't include them in the article. If you don't believe this you can read it in the actual optimization guides I linked to above. Again, for the great majority of 128 bit SSE instructions both C2D and K10 can do 2 per clock. For a tiny subset of instructions both C2D and K10 can do 3 per clock.

"thus your entire article draws conclusions from invalid information."

No. Rather now maybe you understand SSE and both C2D and K10 architecture better.

giant

Thank you.

For comparison, Penryn samples were first mentioned by Xbitlabs on November 27, 2006. Assuming Nehalem is similar, that would be about September 2008 for production. This would mean that Intel could hit the tail end of Q3 08. This would mean Shanghai would not be out much before Nehalem.

ho ho

You can check the Linpack scores on the Top 500 list yourself if you like. C2D is twice as fast per core as K8. Again, K10 is also twice as fast.

Yes, I've read the Triple Core announcement. It is not currently known if AMD put the L3 cache in place of one core to make the die smaller or whether the X3's are quad cores with one core disabled. A different die arrangement is not that difficult if AMD has a modular core now. I have no way of knowing however without a statement from AMD or a die shot.

"Kind of makes me wonder what their quadcore yield is if they have enough crippled ones to sell as a whole new CPU line."

However, I do have to correct your statement about yields. If the yield were that low and these all came from quad cores then AMD would have enough to release in Q4 rather than Q1. The Q1 release either means a fairly good yield or possibly a different die arrangement.

The triple core is rather clever though from a marketing point of view in that it pins Intel between product cycles much as Intel did AMD with Kentsfield. This goes a long way to eliminate the argument about Intel's squeezing AMD on prices since AMD can always undercut Intel with X3 while setting X4 slightly higher.

It has now been suggested that X3 is indeed an X4 with one core disabled. Apparently AMD is saying that it can get higher clocks this way. This is possible if one core is not as good as the others.

ho ho

BTW, aren't those Conroe-L's just regular Conroe's with one core disabled? I wonder which would have more value, a Conroe + a Conroe-L or one X3.

I don’t understand the whining about the X3.

If AMD disabled the one core in the X4, so what?

You want me to list what is disabled in these Intel products:

-Celeron M4xx

-Pentium E2xxx

-Core 2 Duo E4xxx

-Core 2 Duo E6xxx

-Expect lots of Penryns with something disabled too.

Doesn’t that mean bad yield for Intel too?

And really, really bad yield indeed, since it’s in the high volume parts that we see these products with something disabled?

scientia

"Assuming Nehalem is similar, that would be about September 2008 for production."

I wouldn't be so sure if it is similar. Penryn is a whole new manufacturing technology and for that it is not the CPU design that holds release back but the fact that the fabs are still being ramped/built/converted. Nehalem is "simply" an update that will not need any major work at the fabs.

"This would mean Shanghai would not be out much before Nehalem."

For the exact same reason I think Nehalem could be here before a year has passed I would think Shanghai might not be introduced that fast. Even if it would I doubt it would pose any threat to performance lead because if AMD continues what it does now then for the first year or so 45nm will mostly be just for power saving and not for the highest performing chips.

"You can check the Linpack scores on the Top 500 list yourself if you like. C2D is twice as fast per core as K8. Again, K10 is also twice as fast."

I still don't understand if your talk about 64/128bit SSE had anything to do with floating point math or not. So please could you tell me if you saw any kind of difference with floating point or was it only with integer code that SSE didn't get 2x speedup with Core2 and Barcelona?

"A different die arrangement is not that difficult if AMD has a modular core now."

It probably isn't too difficult to produce but it would not be all that smart either. Removing a core from a quadcore won't bring the manufacturing price down by 25%. AMD should be lucky to get 10% down as L3 cache takes quite a lot of die area. Because of that I'm quite sure they are crippled quadcores and not a whole new die.

"If the yield were that low and these all came from quad cores then AMD would have enough to release in Q4 rather than Q1."

It would also be logical to release them in Q1 when it produces only a small amount of them this year so that there wouldn't be left enough for x3 release. Also the fact that big sellers as IBM won't sell you Barcelona based servers before half November kind of hint that this might be the case.

"This goes a long way to eliminate the argument about Intel's squeezing AMD on prices since AMD can always undercut Intel with X3 while setting X4 slightly higher."

Could you give an educated guess on what level would AMD price those triplecores?

"BTW, aren't those Conroe-L's just regular Conroe's with one core disabled?"

To be honest I haven't really studied it. My guess is they could be crippled dualcores.

"I wonder which would have more value, a Conroe + a Conroe-L or one X3."

Well, Conroe-L also has different FSB and L2 cache so it would be difficult to put two of them together. Technically it would be possible. They have hinted that if they feel the need they can do it.

In terms of performance I don't think Intel MCM triplecore would be much worse than AMD X3 if anandtech latency benchmarks are believable. Also it would make it possible for Intel to raise clock speeds a bit.

From Hans de Vries the AMD Phenom X3:

aceshardware

“Microsoft is excited to see AMD creating new technologies like the AMD Phenom triple-core processors,” said Bill Mitchell, corporate vice president of the Windows Hardware Ecosystem at Microsoft Corp. “We see potential for power and performance improvements through triple-core processing in the industry and are exploring with AMD the possibility of taking advantage of this in the Microsoft family of products.”

AMD Adds Multi-Core Triple Threat to Desktop Roadmap

I don’t remember Microsoft making a statement about Intel disabling one of the cores in their Core 2 Duo processors. No doubt this is something huge for the industry.

Soon we will see Opteron’s with 3 cores for sure.

More products, more prices, more opportunities.

Ho Ho

"Penryn is a whole new manufacturing technology ... Nehalem is "simply" an update"

You actually have that backwards: Penryn is not much more than a die shrink while Nehalem supposedly has a lot of changes. However, the one year from tapeout timeline has been shown over and over again so that remains the best estimate.

"Even if it would I doubt it would pose any threat to performance lead"

Well, it is clear where your biases are. First you assume that Intel will still be in the lead and then you assume that Nehalem will be better. You could just as easily assume that Intel will always be in the lead. The truth is that while Nehalem does have advantages Intel will have to deal with CSI, IMC, and a monolithic quad die all of which will be disadvantages in terms of power draw, cost, and yield.

"I still don't understand if your talk about 64/128bit SSE had anything to do with floating point math or not. So please could you tell me if you saw any kind of difference with floating point or was it only with integer code that SSE didn't get 2x speedup with Core2 and Barcelona?"

Once again. Both FP and Integer SSE can be twice as fast on C2D as K8. K10 also supports twice the speed as K8 in both Integer and FP SSE.

"It probably isn't too difficult to produce but"

It has now been suggested that X3 will be based on X4 dies.

"Because of that I'm quite sure they are crippled quadcores and not a whole new die."

As I already mentioned before you posted this.

"It would also be logical to release them in Q1 when it produces only a small amount of them this year so that there wouldn't be left enough for x3 release."

I'm not quite sure what you are trying to say. I think maybe you are trying to claim that AMD won't produce very many K10's. However, AMD's production ratio of K10 should be ahead of Intel's production ratio of Penryn until Q2 08.

"Also the fact that big sellers as IBM won't sell you Barcelona based servers before half November kind of hint that this might be the case."

Not especially. IBM and HP both have very strong server offerings. Dell would be a better indicator.

"Could you give an educated guess on what level would AMD price those triplecores?"

I would assume midway between dual and quad at the same clock. Apparently, they will only be used for the desktop.

"Well, Conroe-L also has different FSB and L2 cache so it would be difficult to put two of them together."

No, you misunderstand. If AMD gets a bad core with X4 it can sell it for a lower price as X3. The equivalent for Intel would be one bad core out of two dies. This would mean one Conroe plus one Conroe-L instead of one Kentsfield.

"In terms of performance I don't think Intel MCM triplecore would be much worse than AMD X3"

True since 3 cores would improve Intel's memory bandwidth.

aguia

"More products, more prices, more opportunities."

You forgot "more confusion" as that would mean even more possible choices for the people :)

Within the range of 300MHz AMD has made around 7 different quadcore CPU models. Just imagine what will happen by the time they finally get 500MHz+ faster and add 2/3 core models to their product line.

Btw, I linked to the same press release several hours ago.

As for MS praising this as something huge I wouldn't make so big deal out of it. This is a press release coming from AMD during the time their marketing doesn't have a whole lot to show off. Because of that they will try to make every little new thing to look like the best thing ever. It has been done before by pretty much everyone.

aguia

Thanks for the Aces link. I read the comments. Not surprisingly, Jack is saying (because Intel didn't think of it first) that three core is a bad idea. Some of the arguments go beyond metaphysical. I think most people can understand that three cores are more than two and that three are cheaper than four.

The arguments about Intel's being able to copy X3 may or may not be correct. See, I know for a fact that all K8 dies are the same. There is no real difference between an Athlon 64 and an 8xxx Opteron. There is a license register on the die (which is not visible to the programmer) which is read by the processor's firmware. This license register tells the chip how many ccHT links it can create.

0 = Athlon 64/Sempron/Turion

1 = Opteron 2xxx

2 = undefined

3 = (all) Opteron 8xxx

This also means that X3 could be used in Opteron if AMD chose to market it that way.

If Conroe is the same as Clovertown (and includes MP firmware) but is artificially limited as K8 is then it may be easy for Intel to copy X3. Does anyone have similar information about C2D as I mentioned for K8?

scientia

"Penryn is not much more than a die shrink while Nehalem supposedly has a lot of changes."

Yes but tell me why doesn't Intel release Penryns sooner, is it because the CPU itself is not ready yet or that 45nm doesn't have enough capacity?

"Well, it is clear where your biases are"

Well, this is simply my educated guess. Only problem is our guesses are different.

"Once again. Both FP and Integer SSE can be twice as fast on C2D as K8. K10 also supports twice the speed as K8 in both Integer and FP SSE."

Yes, that's what I know too. That's also the reason why I was quite confused when you started talking about 64bit vs 128bit SIMD with LINPACK, a FP benchmark. It shouldn't have anything to do with 64bit SIMD being at the same speed as on older CPUs.

"As I already mentioned before you posted this."

Yes, you did it while I was writing mine, I didn't want to delete and edit my post after seeing yours.

"I'm not quite sure what you are trying to say. I think maybe you are trying to claim that AMD won't produce very many K10's."

This is exactly what I was trying to say. Few quadcores would mean few triplecores.

"However, AMD's production ratio of K10 should be ahead of Intel's production ratio of Penryn until Q2 08."

If you define ratio as percentage of CPUs from all their respective production then this might indeed be so. In terms of raw number of CPUs I'm not quite sure if AMD will have the lead. By Q2 next year Intel should be producing more 45nm CPUs than entire AMD CPU output.

"Not especially. IBM and HP both have very strong server offerings. Dell would be a better indicator."

So when could one get a Barcelona based server from Dell?

"True since 3 cores would improve Intel's memory bandwidth."

Actually I was hinting to the thing that sending data between cores in Barcelona seemed to be as slow as when Intel MCM CPU sends data between two dies using FSB. Of course it will also help Intel a bit to have less cores to feed with FSB.

ho ho

"Yes, that's what I know too. That's also the reason why I was quite confused when you started talking about 64bit vs 128bit SIMD with LINPACK, a FP benchmark. It shouldn't have anything to do with 64bit SIMD being at the same speed as on older CPUs."

Then you are still confused. Intel would need 4 FP execution units (which it doesn't have) to double the speed of 64 bit operations. Doubling the speed requires the use of 128 bit operations.

"If you define ratio as percentage of CPUs from all their respective production then this might indeed be so. In terms of raw number of CPUs I'm not quite sure if AMD will have the lead."

No, using raw numbers would be silly since Intel's volume share is 3X greater. You need to use ratio because this is proportionate to each manufacturer's customer obligations. Using raw numbers would mean that AMD would not have an abundance of chips until their chip volume was about equal to their maximum production capacity and this would clearly be incorrect.

"By Q2 next year Intel should be producing more 45nm CPUs than entire AMD CPU output."

Yes, that is certainly possible. But again, the raw numbers are not important.

"So when could one get a Barcelona based server from Dell?"

Okay, basic rule of thumb. If chips or systems are not available within one month of "release" then it wasn't a genuine launch. As I recall, Woodcrest was available in one month but Conroe had shortages for two months.

"Actually I was hinting to the thing that sending data between cores in Barcelona seemed to be as slow as when Intel MCM CPU sends data between two dies using FSB."

No, this definitely is not true.

Communication between sockets though is hardly any faster for AMD than Intel with HT 1.0. Communication on-die is noticeably faster.

"Thanks for the Aces link. I read the comments. Not surprisingly, Jack is saying (because Intel didn't think of it first) that three core is a bad idea. Some of the arguments go beyond metaphysical. I think most people can understand that three cores are more than two and that three are cheaper than four."

Well, I think that with the cheapest Intel dual core costing $75 and the cheapest quad core costing $280, there is a huge price difference of more than $200 between the two lines. That is a huge gap in the market to fill. A triple core for $200, or $150? Not a bad deal.

Ho ho,

You should be happy with this; maybe this will force Intel to launch quad cores with 4MB L2.

What do you think?

Confirmed! NEHALEM IS RUNNING!

http://www.tgdaily.com/content/view/33913/118/

8 cores! 32nm silicon wafers shown off as well! Intel's execution has been totally flawless lately. While AMD stumbles around with 2Ghz CPUs and boasts about three core CPUs Intel goes and does this.

Now a brief comment on the tri-core CPU. It's pointless. There is zero point in a three core CPU. With Penryn, quad core CPUs will drop to ~$200. Dual cores start at ~$70 and go up from there with larger cache, clock speeds and bus speeds to $200, where the quads will start. That leaves no room between them.

If anyone can use all the power of a quad core CPU Newegg has them now for $279.

You should be happy with this; maybe this will force Intel to launch quad core cores with 4MB L2.

No chance. The quads need all the cache they can get. Kentsfield cores have 8MB L2, Yorkfield has 12MB L2.

Nehalem is 731M transistors for a monolithic quad core part. This is down from a total of 820M transistors for a dual die Yorkfield quad core CPU.

Each dual core die on Yorkfield is 107mm squared. It's easy to work out that Nehalem will have a die of roughly 190mm squared. Of course this isn't perfectly accurate, it's just a few shoddy calculations.
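
The rough calculation above can be sketched as follows. It assumes, as the comment does, that transistor density is the same on both parts, which is a simplification since cache packs more densely than logic. The function name is made up for illustration.

```python
# Rough die-area estimate: scale Yorkfield's area by the transistor ratio.
# Assumption: both chips have the same average transistor density.

def estimate_die_area(ref_area_mm2, ref_transistors_m, new_transistors_m):
    """Scale a reference die area by the ratio of transistor counts."""
    return ref_area_mm2 * new_transistors_m / ref_transistors_m

yorkfield_area = 2 * 107       # two dual core dies, in mm squared
yorkfield_transistors = 820    # millions, both dies combined
nehalem_transistors = 731      # millions

nehalem_area = estimate_die_area(
    yorkfield_area, yorkfield_transistors, nehalem_transistors
)
print(f"Estimated Nehalem die area: {nehalem_area:.0f} mm squared")
```

The result lands close to the 190mm squared quoted above. A design with a higher share of logic would come out larger than this estimate.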

But it does show that Intel was waiting with good reason for a manageable die size. The same design on 65nm would have been HUGE. Waiting for 45nm for monolithic quad core was the right decision for Intel to make.

scientia

"Intel would need 4 FP execution units (which it doesn't have) to double the speed of 64 bit operations."

I went back to the Intel manual and finally realized your mistake: when you are talking about 64bit SIMD/SSE you are actually confusing it with MMX. Just look at what units and registers are written in the tables C6/6a.

For real SSE integer improvement see tables C3/3a. For 64bit (double precision) FP see C4a, for 32bit (single precision) FP see C5a. In all of those tables you can see latencies and throughput of Core2 CPUs under 06_0FH and most math instructions (add, mul, div) are running at twice the speed of the older ones, even the double precision ones. I wonder where you got the idea Intel couldn't double its double precision SIMD throughput.

Also the SiSoft Sandra integer benchmark makes some sense as there are instructions that got not two but three times faster on Core2 compared to older CPUs. There are not many of these but I wouldn't be surprised if Mandelbrot uses those.

"Communication on-die is noticeably faster."

I have Anandtech benchmarks backing me up, what do you have besides assuming that native should automagically make things superior?

Do you perhaps have any comments on the first Nehalems rolling out from fabs three weeks ago or on functional 32nm SRAM chips? When was AMD supposed to show Shanghai if it was on target for release in early H2 next year? As you said, it takes about a year from first chips to selling them and from what I know no Shanghai has been shown thus far. Wouldn't that make it possible that Nehalem will be here (long) before it?

Also was it 32nm when AMD was supposed to catch up with Intel? If so then how soon are we going to see 32nm sram from AMD?

aguia

"maybe this will force Intel to launch quad core cores with 4MB L2."

There have been rumours talking about lower clocked desktop. I wouldn't mind seeing 2.13GHz quad for around $200-220. If that would happen I doubt Intel would also cut down on cache size as quadcores benefit from it more than dualcores do.

"working nehalem?" I'm sorry, but working means running, not just a die. Also, Nehalem is not "in production" it's in testing, at one of Intel's development centers where they can do test runs.

Mo, it's not possible for AMD to launch in the same way Intel is capable of because it has nothing like D1D. It simply doesn't have, and has never had, the capital to invest in a fab like that. C2D and the penryn parts we'll see before 2008 are all from that fab, or fabs like it, which is why there were shortages of C2D. In that sense, that was not a full production launch, and in 1 month, we didn't see many, if any, chips from a full production fab. I'd say, that it can be argued that that qualifies as a paper launch. However, it's, at best, questionable, because to define it, we get into the semantics of what a "paper launch" is.

As was mentioned on the previous article's comments, ACER currently has Barcelonas and is apparently launching products with them soon (though I may be incorrectly inferring that). If this is the case, then we already know this is not a paper launch.

Also mo, if you'll reflect back, much of the same FUD surrounded the 65nm launch and the 4x4 launch. While 65nm was not launched in any way in the channel, it was available in any HP or Dell with an AMD processor sold at that time.

Point being, if you're disappointed or discouraged that AMD is not launching like Intel, it's likely you were trying to be that way.

I really don't see how people see 3 cores as stupid. As a very poor college student, the idea is awesome to me. I get 1 more core for 1 more thread, and I get the speed upgrade of the new architecture, and I also get higher clocks than with 4 cores. Chances are, the fourth thread would be wasted anyway, as with games there are scripts, the OS in the background, and physics, and with my technical apps, I've usually got 2 intensive programs running at the same time, and no more (because there's no screen space and no need) so the other core keeps with the OS. The fourth core would simply sit around generating heat in my case, and would impede higher clockspeeds with optimal cooling.

Azmount, you of all people, should know that popular opinion is nothing close to the truth when you've got a marketing giant like Intel against a company like AMD that investors already hate for making the market less predictable, and that the fanchildren hate for giving them an extra choice to have to mull over.

Giant, while I realize your commentary is often valid and helpful, maybe you should try calming down a bit and drinking something soothing before you post. I'm no literature major, but your tone is just ridiculous in anything you write. It's like someone's given you the reins to the Inquisition, and you're trying to sic it on AMD.

Really, this applies to axel and mo, and sometimes even hoho (though to a much lesser extent). Just try writing with a bit more neutral tone, and it'll be easier to deal with your arguments more productively and I'm sure scientia will stop deleting your posts.

Greg

I really don't see how people see 3 cores as stupid.

In my opinion, it is nothing more than marketing and Intel will end up having a Quad-core at its price point just for more pressure.

The funny thing to me is AMD knows they are in trouble on the desktop and are now trying to make a new segment for themselves.

Look at it this way...

Do you believe that AMD has enough additional capacity to make these Quad-cores with one core disabled, or is this an attempt to salvage whatever they can?

Azmount Aryl

"But you did include pics clearly showing C2 taking off comparing to K10, exactly because of that extra SSE unit:"

Again, your interpretation is wrong. The "extra" SSE unit is very limited in its functionality and does not significantly increase C2D's SSE performance. Again, AMD's FSTORE unit is very similar.

"then using pics showing 100% opposite"

The pics do not show what you are claiming.

"And I'd like to know when will you stop claiming K10 being faster at the same clock comparing to C2."

Why would I? Proper testing should eventually show this. The testing has been pretty dismal so far.

"it is not your opinion that counts but the opinion of the masses."

Then it's good I have comments from someone like you who speaks for the masses.

"I'm sorry, but working means running, not just a die. Also, Nehalem is not "in production" it's in testing, at one of Intel's development centers where they can do test runs."

Why don't you take a look yourself? http://www.fudzilla.com/index.php?option=com_content&task=view&id=3122&Itemid=51

The demo is of two quad core CPUs with 2 threads/core. They ran some sort of 3D program. I don't know exactly what it is.

Of course Nehalem is not in full production. They're just doing the test runs at D1D. Full scale production isn't until next year.

The fact that Intel's 45nm ramp is relatively slow is actually a good thing for Intel. During Q4 and Q1 is when the yields are worst and this is when volume is lowest. By Q2 both yields and volume should be up. AMD's ratio of K10 will still be higher but this is not an important distinction as Intel's Q2 volume should be high enough to make the contest truly Penryn versus K10.

Although some keep trying to repeat raw numbers (like some kind of mantra) this is not a logical comparison. Both Intel's and AMD's vendor obligations are related to marketshare, not total numbers. Also, the effect on ASP and gross margin is related to ratio, not raw numbers. The important comparison between K10 and Penryn is ratio or percentage of production rather than raw numbers or total numbers.
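
A small sketch may make the raw number versus ratio distinction concrete. The 3:1 total volume comes from the discussion above; the two conversion fractions are purely hypothetical values chosen for illustration and are not figures from either company.

```python
# Raw volume versus ratio of production for a new chip generation.
# Total volumes use the 3:1 figure from the thread; the conversion
# fractions below are HYPOTHETICAL and only illustrate the argument.

def new_chip_stats(total_volume, fraction_new):
    """Return (raw volume of new chips, share of that vendor's own output)."""
    return total_volume * fraction_new, fraction_new

intel_raw, intel_ratio = new_chip_stats(total_volume=3.0, fraction_new=0.30)
amd_raw, amd_ratio = new_chip_stats(total_volume=1.0, fraction_new=0.60)

print(f"Intel: raw {intel_raw:.2f} units, {intel_ratio:.0%} of its output")
print(f"AMD:   raw {amd_raw:.2f} units, {amd_ratio:.0%} of its output")
```

With these made-up fractions Intel ships more new chips in raw terms while AMD has converted a larger share of its own production, which is the distinction being argued.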

As I've mentioned before, there is still no tapeout announcement on Shanghai. Logically, Shanghai's tapeout should have come before Nehalem's. This could mean that Nehalem arrives before Shanghai. This would also mean that AMD's 45nm has slipped by a quarter.

Yes, Intel's execution (except for C2D above 3.0Ghz) has been on track. The area where Intel will face the most serious competition is in servers. AMD's server volume was cut roughly in half so AMD is likely to have large gains. This is far more important for AMD than it is for Intel since a 66% gain for AMD is only a tiny 12% loss for Intel.
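
As a quick check of that arithmetic, the sketch below works out which starting shares would make a 66% gain on one side equal a 12% loss on the other. It assumes a two vendor market where every unit AMD gains is a unit Intel loses; the function name is illustrative only.

```python
# Implied server shares if a 66% AMD gain equals a 12% Intel loss.
# Assumption: only two vendors, so amd_share + intel_share == 1.

def implied_shares(amd_gain, intel_loss):
    """Solve amd_gain * amd == intel_loss * intel with amd + intel == 1."""
    amd_share = intel_loss / (amd_gain + intel_loss)
    return amd_share, 1.0 - amd_share

amd, intel = implied_shares(amd_gain=0.66, intel_loss=0.12)
print(f"Implied starting shares: AMD {amd:.1%}, Intel {intel:.1%}")
print(f"After the shift: AMD {amd * 1.66:.1%}, Intel {intel * 0.88:.1%}")
```

The two percentages are consistent when AMD starts at roughly 15% of the two vendor total.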

enumae

Your point about the volume of tri-core is of course correct in terms of downgraded quad dies. But you need to keep this in perspective. The number of Kentsfields that Intel has sold to date is tiny. Likewise the great majority of new HPC systems used Woodcrest rather than Clovertown. However, as small as Intel's quad core market was the initial market for tri-core is even smaller. AMD currently only plans this for the desktop. It's just a way of getting value out of cores that would otherwise be a loss. The arguments about demand are not really valid since the volume is small. The argument about limited volume is not entirely correct since AMD could run off an actual tri-core die if demand were high enough.

Nehalem's transistor count obviously shows a big reduction in cache. Roughly, it looks very similar to Clovertown with the extra transistors accounting primarily for IMC and CSI (although some could be for as yet unannounced new functionality). This would suggest a cache size similar to Clovertown.

Ho Ho

"I went back to the Intel manual and finally realized your mistake: when you are talking about 64bit SIMD/SSE you are actually confusing it with MMX."

No, you are actually incorrect but at least you are trying.

"For real SSE integer improvement see tables C3/3a. For 64bit (double percsision) FP see C4a, for 32bit (single percision) FP see C5a. In all of those tables you can see latencies and throughput of Core2 CPUs under 06_0FH and most math instructions (add, mul, div) are running twice the speed compared to older ones, even the double percisision ones."

Okay, I'll explain your mistake.

For Intel we have table C-4 which is labeled as "Streaming SIMD Extension 2 Double-precision Floating-point Instructions"

Presumably you interpret this as double precision rather than 128 bit. This table includes the instruction:

MULPD

which we can see in table C-4a is 4X faster than in the older Pentium M and now has a throughput of 1/cycle in C2D. This seems to match your reasoning that C2D is actually 4X faster. This is logical and I can see how you would make this mistake.

Now, let's look at the K8 Guide. We find MULPD listed in table 19 in the SSE2 instructions with a throughput of 1 every 2 cycles. Obviously this is half of C2D. This of course refutes your claim that C2D is actually 3X or 4X faster. But, nonetheless you could still naively think that C2D is twice as fast for double precision.

Now, let's look at the K10 guide. We find MULPD in table 15 listed as a 128 bit media instruction. In K10 it has a throughput of 1/cycle. Again, this matches what I said that both C2D and K10 are twice as fast as K8 when using 128 bit instructions.
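
The comparison can be written out as a short sketch. The throughput figures are the ones quoted above from the three guides; the only added fact is that a 128 bit MULPD operates on two 64 bit doubles. Only multiplies are counted here, so this is not a full peak FLOPS figure.

```python
# Double precision multiplies per cycle implied by the MULPD throughput
# figures quoted above (instructions per cycle).

DOUBLES_PER_128BIT = 2  # one 128 bit XMM register holds two 64 bit doubles

mulpd_per_cycle = {"K8": 0.5, "C2D": 1.0, "K10": 1.0}

def dp_mults_per_cycle(instr_per_cycle):
    """Multiplies per cycle = instructions per cycle * doubles per instruction."""
    return instr_per_cycle * DOUBLES_PER_128BIT

k8_rate = dp_mults_per_cycle(mulpd_per_cycle["K8"])
for cpu, throughput in mulpd_per_cycle.items():
    rate = dp_mults_per_cycle(throughput)
    print(f"{cpu}: {rate:.0f} DP multiplies/cycle ({rate / k8_rate:.0f}x K8)")
```

Both C2D and K10 come out at twice the K8 rate, and neither comes out at 3X or 4X.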

If you have an actual example which refutes this feel free to post it.

savantu, axel & giant

I deleted additional posts concerning the core count on the techware linpack scores. Why? Because these posts had nothing at all to do with my article which says:

The problem with this data is that the article does not make clear exactly what the test configuration is.

We can infer that Barcelona is dual since the motherboard is dual. We could also infer that 5150 is dual since 5160 was dual. But this is not stated explicitly.

So, we either have a comparison where the author is correct and Barcelona is faster or we have Barcelona using twice as many cores to achieve a similar ratio to what we saw at Anandtech.

If you have something to say about the core counts that is related to my article rather than just trolling then feel free to post it.

ho ho & azmount

Sometimes I forget that processor function is complex and that most people don't have the same understanding. At least you are trying to understand how processors work. That also makes it clearer how the reviewers could make the same mistakes in interpreting the information.

others

I've also deleted duplicate posts that cover raw numbers versus ratios of production. I really don't need half a dozen posts which all say that Intel's raw numbers for Penryn will be higher than AMD's K10 numbers. This should be obvious and has already been stated.

"The funny thing to me is AMD knows they are in trouble on the desktop and are now trying to make a new segment for themselves."

So I guess for the same price and lower performance you'd rather buy an Intel dual-core, but never an AMD tri-core?

It is a segment for AMD itself but also a segment that's not addressed by anyone else.

Abinstein

So I guess for the same price and lower performance you'd rather buy an Intel dual-core, but never an AMD tri-core?

A few questions...

1. How do we know pricing?

2. They are already losing money so how low can they price these?

Let alone any further reduction or pricing pressure against current/future Dual-cores is a bad move.

3. Why the "never an AMD tri-core"?

If it is truly better than my Quad or my previous Dual-cores for gaming (World in Conflict looks amazing, no benefit for BF2) I am all over it.

It is a segment for AMD itself but also a segment that's not addressed by anyone else.

Because they just made it up!

-----------------

But, if you read my comment, you would have seen that my opinion is...Intel will end up having a Quad-core at its price point just for more pressure.

This is my opinion, not fact, but I do not believe that Intel will sit back and just let this happen.

Preliminary pricing for the new Penryn based Xeons at Dailytech show a few Quad-cores under the current $266 Q6600, so there could possibly be better performing 45nm parts with the additional cache and equal pricing by then if tri-cores are priced near the $266 mark.

scientia

"This would also mean that AMD's 45nm has slipped by a quarter."

When was the last time AMD said anything about releasing its 45nm CPUs and when was the release supposed to take place? Also how will it affect your prediction that AMD will catch up with Intel by the time 32nm comes?

"Nehalem's transistor count obviously shows a big reduction in cache."

I'd say 1M L2 per core and 4M L3 shared between four cores.

"Roughly, it looks very similar to Clovertown with the extra transistors accounting primarily for IMC and CSI"

I would expect replacing two FSB connections with one CSI connection and an IMC might not need all that many transistors.

There was also talk of an 8-core Nehalem. It seemed as if it was supposed to be native, not an MCM solution. At a die size of roughly 400mm squared it should be doable considering the latest GPUs are even bigger than that. Nearly 1.5 billion transistors in a single die will be rather impressive; so far only Itanium has done it, thanks to its huge cache.

"Presumably you interpret this as double precision rather than 128 bit"

I interpret it as the title of the table said: double precision (64bit per number, two DP numbers in one register) SSE floating point. Most SSE instructions run in 128bit XMM registers. Things that run in 64bit MM aka MMX registers don't have much to do with SSE floating point calculations.

"But, nonetheless you could still naively think that C2D is twice as fast for double precision."

Are you claiming that I said Core2 is twice as fast as K10? Where exactly did I do that?

I only said that Core2 is twice as fast as older CPUs, including K8 and P4, when running most SSE instructions. In case of some instructions the difference could be more than 2x.

"If you have an actual example which refutes this feel free to post it."

To me it seems you just tried to prove I was wrong about something that I never even claimed. Also I can't see what I should refute, as your own example shows Core2 and Barcelona should run double precision SSE twice as fast as older CPUs do.

Scientia said...

Sometimes I forget that processor function is complex and that most people don't have the same understanding. At least you are trying to understand how processors work. That also makes it clearer how the reviewers could make the same mistakes in interpreting the information.

You didn't understand my argument. I'm not saying that the third SSE unit in C2 isn't what it is. No. My argument is that the numbers show C2 being faster on absolute SSE performance, which doesn't match your claim that on SSE C2 and K10 are nearly equal (clock for clock).

C2 has three SSE units. Those units are not equal but that is not what matters; what matters is how well they perform together.

I think I'm going to hold off on discussing this matter until we have more tests. This seems logical to me; there is simply not enough data so far.

Scientia said...

Then it's good I have comments from someone like you who speaks for the masses.

Weeellll, thank you. Thank you so much.

You didn't understand my argument.

Why am I not surprised ? :P

I'm not saying that the third SSE unit in C2 isn't what it is. No. My argument is that the numbers show C2 being faster on absolute SSE performance, which doesn't match your claim that on SSE C2 and K10 are nearly equal (clock for clock).

C2 has three SSE units. Those units are not equal but that is not what matters; what matters is how well they perform together.

I think I'm going to hold off on discussing this matter until we have more tests. This seems logical to me; there is simply not enough data so far.

You're grasping at straws. Both Core and K10 are designs which can sustain 4 DP flops per cycle. Does that mean they have the same SIMD throughput?

Not at all. As you correctly say, Core has 3 SSE units, K10 has 2, all are 128bit. Does that mean the units are the same? They aren't the same on a single chip, not to mention on Core and K10.

The fact is simple: Core SSE units are far more powerful than K10's units. Sandra tests show this; it is a best case scenario.

In the real world K10 often offers similar or better performance. This isn't because it has better SSE units, but because it can feed them better. The IMC comes in handy.

FP apps are usually BW starved and K10 has 2x the memory BW of Core. Had Core had an IMC it would fly past K10 in FP apps.

End of story.

Ho Ho

I'm not sure how else to explain to you about SSE. K8 has the same number of SSE execution units as C2D; the only difference is that C2D's are wider. It simply is not possible for C2D to execute more 64 bit instructions than K8.

"Also how will it affect you prediction that AMD will catch up with Intel by the time 32nm comes?"

That isn't my prediction; that is AMD's schedule. I would assume that we can guess more about AMD's adherence to their schedule when they produce their own 32nm test SRAMs.

"I'd say 1M L2 per core and 4M L3 shared between four cores."

That is certainly possible. Like I said, I think 8 MB's (same as Clovertown) is a good rough estimate.

"I would expect replacing two FSB connections "

Two? Oh, you mean one on each die. No, this isn't likely to be smaller since Nehalem also increases bandwidth. I'd say about the same die area.

"with one CSI connection and an IMC might not need all that many transistors."

Another interesting thing is that even though Nehalem has fewer transistors it actually has more die area. Yes, Nehalem is larger than Penryn. Nehalem's second problem is that trading cache transistors for logic transistors burns up more power.

So, with fewer transistors Nehalem will actually be larger and less efficient.

scientia

"It simply is not possible for C2D to execute more 64 bit instructions than K8."

What kind of 64bit instructions are you talking about now? Still MMX? If you didn't notice then I said several times that I'm talking about double precision floating point instructions executed in XMM registers.

Did K8 execute twice as many double precision FP SSE instructions as P4? If not, then my point still stands that Core2 can execute twice as many DP FP instructions as K8 since it executes them twice as fast as P4. Even your own MULPD example showed that.

"Two? Oh, you mean one on each die. No, this isn't likely to be smaller since Nehalem also increases bandwidth. I'd say about the same die area."

What kind of logic is that? Why should increased bandwidth also increase the number of used transistors? Is CSI connection considerably more complex than FSB connection?

Also I said "not too much more" and meant that CSI+IMC together will probably not be a lot bigger than two FSB connections. I'm quite sure it will be bigger but not much.

"Another interesting thing is that even though Nehalem has fewer transistors it actually has more die area."

Can you link to the article that mentions die area? I haven't yet read about it. Although it is quite logical it can have bigger die as cache takes less room than logic.

"So, with fewer transistors Nehalem will actually be larger and less efficient."

Less efficient in what? Power per transistor, performance per watt or something else? Comparing the performance and transistor counts of K8 and K10 one can say that K10 is less efficient also.

I would expect replacing two FSB connections with one CSI connection and an IMC might not need all that many transistors.

LOL hoho, have you already seen the size of AMD IMC/NB?

Or in your language, have you already seen the size of one Intel chipset NB?

There was also talk of an 8-core Nehalem. It seemed as if it was supposed to be native, not an MCM solution. At a die size of roughly 400mm squared it should be doable considering the latest GPUs are even bigger than that. Nearly 1.5 billion transistors in a single die will be rather impressive; so far only Itanium has done it, thanks to its huge cache.

Ho ho it is really you?

Itanium is very little, represents 0,1% of Intel production? Beside cache is "easy" to make if its for low demand products.

Also you already forgot my post that most of Intel volume is in the specs cut processors.

Only in the quad cores and high end dual cores is a full core used. Quad cores account for 2% according to Intel, and about 10% for the dual cores (maybe not that much since Intel mobile CPUs are almost all 5xxx versions with 2MB cache).

Besides the 8 core is MCM for sure or a version with HT enabled.

FP apps are usually BW starved and K10 has 2x the memory BW of Core. Had Core had an IMC it would fly past K10 in FP apps.

Anandtech table shows that AMD “only” has 10,6GB/s memory bandwidth and Intel “just” 21GB/s memory bandwidth, are you sure they are memory BW starved?

And for all those who say that Intel could do lower clocked quad cores still with 8MB L2: have you seen the price of one full 4MB L2 dual core processor? I think you have not. Intel isn’t a charity. I just can’t see Intel making money-losing products…

Besides I just don’t know how you guys know that quad cores with 4MB L2 don’t work. And how you guys know the performance, if we never saw one?

aguia

"LOL hoho, have you already seen the size of AMD IMC/NB? "

IIRC it was quoted to be around 100M transistors in size. Penryn MCM quads have around 820M transistors vs ~580M for Clovertowns with a third less cache. Assuming that Nehalem will have a total of 8M cache it will have around 150M additional transistors, besides those freed by removing the FSB connections, to spend on general core improvements, IMC and parts of NB functionality. I'd say that is quite plenty.
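
The transistor budget above works out as in this sketch. It takes the quoted counts at face value and assumes the whole Penryn versus Clovertown difference is the extra 4MB of cache, which ignores any core changes between the two; the variable names are illustrative.

```python
# Transistor budget for Nehalem, using the counts quoted in this thread
# (all values in millions of transistors).

penryn_quad = 820     # MCM quad with 12MB L2
clovertown = 580      # MCM quad with 8MB L2
nehalem = 731         # monolithic quad

# Assumption: the Penryn/Clovertown gap is entirely the extra 4MB of cache.
per_mb_cache = (penryn_quad - clovertown) / (12 - 8)

# Assumption: Nehalem carries 8MB of cache, like Clovertown.
extra_for_logic = nehalem - clovertown

print(f"Implied cost of cache: {per_mb_cache:.0f}M transistors per MB")
print(f"Nehalem budget beyond a Clovertown-like design: {extra_for_logic}M")
```

That leaves about 150M transistors, somewhat more than the roughly 100M quoted for AMD's IMC/NB.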

"Or in your language, have you already seen the size of one Intel chipset NB?"

Barcelona doesn't have full chipset in its CPU and neither will Nehalem. Also I wouldn't be surprised if the entire NB die isn't filled with useful transistors. The NVIO chip in G80 isn't either but it has to be relatively big to be able to connect enough pins to it.

"Itanium is very little, represents 0,1% of Intel production? Beside cache is "easy" to make if its for low demand products."

That was exactly my point that previously that high transistor count was achieved by cramming a whole lot of cache to the die. Nehalem should have less than half the die made up from cache and it will have considerably more market share than Itanium.

"Also you already forgot my post that most of Intel volume is in the specs cut processors."

You do know that Intel has separate die for 2M L2 cores? All of E6xxx use 4M of L2. All E4xxx have 2M of cache on die fully used. Probably only E2xxx use half the cache of E4xxx and single cores use only one core from a die. I wouldn't be so sure that E2xxx and celerons make up most of the volume.

"Only in the quad cores and high end dual cores a full core is used, quad cores account to 2% according o Intel, and about 10% for the dual cores"

Anything you can show that would back you up?

"Besides the 8 core is MCM for sure or a version with HT enabled."

I'm not 100% sure about MCM but news so far have said it will not be MCM. Also it was said that 8-core CPU will have a total of 16 visible cores with HT enabled.

"Anandtech table shows that AMD “only” has 10,6GB/s memory bandwidth and Intel “just” 21GB/s memory bandwidth, are you sure they are memory BW starved?"

Where does Intel have such a bandwidth? With FSB it is impossible to achieve. Also in 2P configurations AMD has around 10.6GB/s bandwidth per socket whereas Intel has the whole bandwidth shared between all sockets.

"And for the all that say that Intel could do lower clocked quad cores still with 8MB L2. Have you see the price of one full 4MB L2 dual core processor?"

E6550 for $175.99 (E6600 is considerably more expensive but is discontinued). At roughly the same clock speed Q6600 costs around $100 more. Intel seems to have discontinued producing lower speed dualcores with 4M of L2. If it would have one it would cost around $140-150, 1.8GHz one would be around $120.

"Besides I just don’t know how you guys know that quad cores with 4MB L2 don’t work"

They will work, just that with less cache they need to access memory more often and FSB can become a bottleneck.

http://www.tgdaily.com/content/view/33913/118/

AMD is simply AT LEAST 1 year behind...

Where does Intel have such a bandwidth?

Intel 2S Processors

Maybe some mistake from Anandtech?

At roughly the same clock speed Q6600 costs around $100 more. Intel seems to have discontinued producing lower speed dualcores with 4M of L2. If it would have one it would cost around $140-150, 1.8GHz one would be around $120.

Exactly what I said. Intel won’t have products where they lose money making them. That’s why Intel seems to have discontinued producing lower speed dualcores with 4M of L2.

They will work, just that with less cache they need to access memory more often and FSB can become a bottleneck.

Have you seen any comparison of one E4xxx (2MB) VS E6xxx (4MB) using the same FSB speed, where the 2MB version lose by a huge amount?

aguia

"Maybe some mistake from Anandtech?"

Not a mistake but misinterpretation. That seems to be the sum of FSB bandwidths but memory bandwidth is a lot less than that.

"Intel won’t have products were they lose money doing them"

I seriously doubt they would lose money. It's just that they probably won't want to create too many CPUs with only small differences. If they would lose money on selling 4MB CPUs cheap then why does each chip in Q6600 cost only around $140 instead of >$170? It cannot be because of savings on packaging as there isn't really a big difference between quadcore and lowest-end Celeron in terms of cost.

"Have you seen any comparison of one E4xxx (2MB) VS E6xxx (4MB) using the same FSB speed, where the 2MB version lose by a huge amount?"

No, because 2MB is mostly enough for dualcores. Also 2MB versions are clocked lower so they won't do as much work per time unit as faster clocked CPUs leading to less usage.

A bit OT but I remembered Abinstein once said that Intel cannot use Barcelona-like cache architecture because of some AMD patents. Now that Intel has talked about "multi level caches" with Nehalem I wonder if Abinstein was wrong or Intel does something completely different from AMD that won't have patent problems.

Another mistake of his I saw on amdzone was that he claimed associativity must be a multiple of 16 and that is clearly wrong as Penryn will have its L2 with 24-way associativity. Also lower than 16-way associativity has also been used many times before.

Small mistake but I think he would like to know about them. I'd post it on amdzone but I guess I'm banned from there or their forum software is completely broken, I'm not sure what is the reason I can't properly log in.

Not a mistake but misinterpretation.

Mine or theirs?

That seems to be the sum of FSB bandwidths but memory bandwidth is a lot less than that.

If that’s the case why didn’t they do the same with the Intel 4S systems, or with the 2S/4S AMD systems since those have independent FSB/IMC bandwidth?

aguia, it's their mistake and I have no idea why they messed things up as they did. AMD has 10.6GB/s bandwidth per socket and for 2P it should mean 21.2GB/s, not what they listed.
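
For reference, peak figures like these come from transfers per second times bytes per transfer times the number of links. The sketch below assumes dual channel DDR2-667 per AMD socket and two 1333 MT/s, 64 bit front side buses on the Intel 2S platform. Those configurations are assumptions made for illustration, not something stated in the Anandtech table.

```python
# Peak bandwidth = transfers per second * bytes per transfer * links.
# The memory and FSB speeds below are ASSUMED configurations.

def peak_bandwidth_gb_s(mega_transfers_per_s, bytes_per_transfer, links=1):
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * links / 1e9

# Assumed: two 64 bit DDR2-667 channels per AMD socket.
amd_per_socket = peak_bandwidth_gb_s(667, 8, links=2)
amd_2p_total = 2 * amd_per_socket

# Assumed: two independent 64 bit front side buses at 1333 MT/s.
intel_fsb_sum = peak_bandwidth_gb_s(1333, 8, links=2)

print(f"AMD per socket:  {amd_per_socket:.1f} GB/s")
print(f"AMD 2P total:    {amd_2p_total:.1f} GB/s")
print(f"Intel 2S FSB sum: {intel_fsb_sum:.1f} GB/s")
```

Under these assumptions the Intel figure in the table matches the sum of two FSBs, while the AMD figure matches a single socket rather than the 2P total.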

mo

"Nahelem was BOOTED UP in WINDOWS!!!!"

... and it ran some rendering task: link. You can see a 2P quadcore showing 16 CPUs, half of them thanks to SMT. Quite impressive for a chip that is three weeks old I'd say. What did AMD show three weeks after first Barcelonas? Heck, it didn't show much a few weeks before launch!

What do you guys think?

-Intel Estimated SPECfp performance-

Those numbers seem quite reasonable considering the CPU and FSB clock speeds.

savantu

"Core and K10 are designs which can sustain 4 DP flops per cycle."

True.

"Does that mean they have the same SIMD throughoutput ?"

According to Intel and AMD it does. The throughputs are listed in the optimization guides and these are nearly identical.

"Not at all."

You should then explain to Intel that their guide is wrong.

"As you correctly say Core has 3 SSE units , K10 has 2 , all are 128bit."

This is incorrect. Going over the 128 bit instructions shows that K10 is nearly identical to C2D in terms of 128 bit execution with, again, some small group of instructions being performed by both C2D's third SSE unit and K10's third execution unit.

"The fact is simple : Core SSE units are far more powerful than K10s units."

This has not been shown.

Correction: 'had you noted removed' should have been had you not removed

I should check my own posts before I submit them.

The Spec_FP scores for Harpertown are interesting. It'll be interesting to see a full suite of benchmarks of 3.2Ghz Harpertown vs. 2.5Ghz Barcelona come November.

scientia wrote

enumae

Your point about the volume of tri-core is of course correct in terms of downgraded quad dies. But you need to keep this in perspective. The number of Kentsfields that Intel has sold to date is tiny.

scientia

Do you have a link to a document that backs up your Kentsfield claim? What is tiny in this context? Please post a link.

Mo said:

Greg,

First of all, Intel demonstrated a working Nehalem. It booted Windows as well as OS X. And the chip just came off about 3 weeks ago, so it's VERY early and it's already booting an OS. So they did not just show a die.

The thing is, I have no problem admitting that Intel either did a paper launch or a VERY soft launch.

AMD has launched a product, but AMD has YET to ship the stepping that it says it will be using (BA).

I'd rather have AMD do the launch when BA is ready...but obviously we both know that another delay would have been devastating. It was obviously better for them to do a paper launch.

Intel constantly gets the heat for launching products that are not available to the market.

But as soon as AMD does it, it's no longer a paper launch? and we have to consider rules of thumb?

Hope you see where I am coming from.

Greg, you realize that if Azmount, Giant, hoho, Axel stopped posting here, this blog would just die off. Someone has to question sci when he makes a claim that doesn't stand....right?

giant said:

For people who do want to see the Nehalem demo in video, here is the link:

webcast

It's in the Pat Gelsinger webcast, towards the end.

giant

You ask why I have deleted posts in this thread. There have been a number of reasons.

The first post I deleted was Aguia's. I deleted it because it was nothing but a long copied list of Microsoft applications, few of which had anything to do with benchmarking.

I deleted Axel's post about Linpack. The factual parts were fine but I'm not going to spend my time correcting his misstatements about my article. If you want to correct something I've said then state what I actually said. I get really, really tired of people who do posts where they claim to disagree with me and then only repeat what I've already said, or people who claim to disagree and then state their disagreement to something I never said.

If you have an opinion, fine. If you want to state facts, great. But, if you claim that you are disagreeing with something I've said then state what I said correctly.

I have tolerated a great deal from posters such as having people say that they are posting at roborat's blog. Contrary to what you might think, saying here on my blog that you are also posting to roborat's blog is not a public service; it is simply rude. And, not surprisingly, repeating it over and over is no less rude. Do I really have to explain to you that comments calling me a fanboy or suggesting that I take down my blog or saying that my blog is worthless are also rude?

Yes, I know that in your eyes I am such a blog tyrant who just cannot understand how great and wonderful Intel processors are and just can't quite grasp that AMD will go bankrupt any day now. Let me acquaint you with reality.

Unlike a forum there is only a single thread here. If comments get off topic then this thread becomes cluttered. Yet, I have tolerated when people post cherry picked pro-Intel news here. These items are not usually on topic so I have no reason to allow it. This doesn't exactly fit with being a blog tyrant does it?

You can give your opinion as whatever you like. I'm not going to delete your posts as long as you aren't trolling. If you honestly don't understand what trolling is, it would be something like, "Everything AMD makes is crap. Their processors suck. BK!!"

You can disagree with me all you like; just have some manners. I've never deleted a post where someone said, "I disagree with you.", "I think you are wrong." or "I don't think that is correct." However, why should I tolerate posts that start off with "You're such an idiot/fanboy/liar/etc." These will likely be deleted.

The fact is that when people point out genuine errors in my articles, I correct them. I've done this many times when either someone pointed out something or I realized an error myself. Telling me here, on my blog that I lie about things will definitely get your post deleted. If you think that is unfair then so be it.

Obviously Gutterat didn't understand my post so let me be more clear. Any further posts saying that you are posting at Roborat's blog should be done at Roborat's blog. Mentioning that in your post here will get it deleted regardless of how thoughtful, how factual, or how on topic the rest of your post is.

Axel

"The bottom line is that my post was completely on-topic and not a troll and you still deleted it. Most importantly it provided factual evidence and links for other readers to follow to prove to themselves that the logic behind your blog entry was fatally flawed."

Okay, I'll hunt up your deleted post and see if what you just said is true.

axel

You said: 1. The configuration for the Barcelona test system is listed. It's a PSSC A2400 2P server box with two Opteron 2347 Barcelona processors. That's a total of eight cores.

I said: We can infer that Barcelona is dual since the motherboard is dual.

Okay, we agree.

You said: 2. The Xeon 5150 is a dual core Woodcrest processor designed only for DP systems. The only Merom-based Xeon processors to support 4P are the Xeon 7200 and 7300 CPUs.

4. Therefore the Xeon 5150 system in the Techware test was a DP system, with a total of four cores.

I said: We could also infer that 5150 is dual

We agree again.

You said: 5. Therefore Techware committed an obvious blunder and their credibility is called into question.

I said: So, we either have a comparison where the author is correct and Barcelona is faster or we have Barcelona using twice as many cores to achieve a similar ratio to what we saw at Anandtech.

Sounds pretty close to what you said. The only difference that I can see is that I mention the author's being wrong as a possibility whereas you assume it.

However, you then go on to say:

You said: 6. Therefore the entire foundation for your latest blog entry is flawed, and hence so are any conclusions you made.

Your points agreed with mine yet you then claim (falsely) to disagree with me. I also can't imagine by what twisted logic you claim that your agreement with me proves I am wrong. So, your aggressive statement is nothing more than trolling.

Intel Penryn desktop processors listed

Notice that the QX9650 (3.0 Ghz) with 1333Mhz FSB draws 130 watts. Notice too that this is the only desktop Penryn for 2007.

So, what happened to the 3.33 Ghz chips at launch that everyone was talking about? What happened to the 1600Mhz FSB's? What happened to the multiple bins? And, what happened to the big reductions in power draw for 45nm? Notice that the 65 watt chips have half the cache (which is less than Kentsfield has now).

When I said before that Intel's initial yields for 45nm were not that great and that volumes would be low no one wanted to believe it. Do you believe it now?

Scientia

Your points agreed with mine yet you then claim (falsely) to disagree with me... So, your aggressive statement is nothing more than trolling.

I submitted that post before you revised your entry. Previously you had assumed that the Xeon system in the Techware review was using eight cores and then drew some erroneous conclusions from it. It's actually Savantu who pointed it out, but you deleted his post as well even though he didn't troll at all.

As far as my post being a bit "aggressive", it's because I was ticked off that you'd deleted my previous post (check your history, it was far from a troll post and you still deleted it). If you wish for people to be polite, it's simple: Respect their freedom of speech and stop deleting posts. Otherwise you'll get people wound up like you did with Mo.

I do agree with one conclusion you made: "We'll have to conclude that the slow speed of the code on AMD processors is simply due to poor code optimization."

Scientia

Notice too that this is the only desktop Penryn for 2007.

Which is more than was expected. Previous rumors indicated that only Harpertowns would be launched in 2007, with Yorkfield in Q1 2008. So Intel has clearly pulled the launch in to spoil the Phenom party.

And, what happened to the big reductions in power draw for 45nm?

It's simple, the high binning 3.0 GHz quadcores fit into the 80W TDP envelope and will be sold as Xeons, because that's where power efficiency matters most and the ramp will be the earliest. Later in Q1 as 45-nm yields improve, you can expect the desktop space to begin to see lower TDP parts.

axel

You and savantu don't know when to take a compliment. You both pointed out a problem with my original article so I corrected it (which I can assure you I don't do for every poster who claims to make a correction).

Now again, I wouldn't be so stupid as to suggest that a processor was doing well if it took twice the cores to gain a small advantage. My initial assumption was that this was so obvious that no one (including the author) would make this mistake.

However, I realized that there was no real reason to assume that the Techware author knew what he was doing (since I haven't seen other reviews by him). And it is curious that if you did assume twice as many cores then the score was close to what Anandtech got. So, I changed the article and gave his test scores no weight.

Scientia

...Notice too that this is the only desktop Penryn for 2007.

It was mainly rumored that the only Penryn desktop parts launching in 2007 would be the Extreme processors, but let's get a prediction from you... Do you believe that Intel will release a faster part if AMD is able to match performance?

So, what happened to the 3.33 Ghz chips at launch that everyone was talking about?

There is no way to respond without looking as if I have a major bias... But does Intel need it?

What happened to the 1600Mhz FSB's?

When has that ever been stated for the desktop?

The only person or source that hinted at that was Abinstein here on your blog.

What happened to the multiple bins?

How many do you want?

Intel potentially having seven new processors available in January covers almost all existing (Q*** and E6***) products.

And, what happened to the big reductions in power draw for 45nm?

Any Quad-core labeled Extreme has a TDP of 130W. Look at the QX6700 (130W), and the Q6700 (95W).

As for the others, couldn't they be using the same numbers to make it easier for OEM's/system builders?

Notice that the 65 watt chips have half the cache (which is less than Kentsfield has now).

Those are Dual-cores not Quads.

...Do you believe it now?

No... While Volume might be low there is nothing showing that yields are the cause.

My opinion is that there won't be any shortage of Penryn quad-cores; my reasoning is in Intel's Q2 conference call...

enumae said...

"It was mainly rumored that the only launching Penryn Desktop part for 2007 would be the Extreme processors,"

What about Intel's 3.33Ghz demo?

"but lets get a prediction from you... Do you believe that Intel will release a faster part if AMD is able to match performance?"

No, it is looking like Intel won't be able to hit 3.33Ghz at a normal TDP in Q1.

"Those are Dual-cores not Quads."

My mistake. But that then makes it worse since these are only half the 130 watt TDP. Again, where is the much heralded 45nm power savings?

Now, are you claiming that these chips will completely replace the 65nm chips in Q1 or are you claiming that Intel will keep the 45nm prices high enough to maintain demand for the older chips?

Scientia

What about Intel's 3.33Ghz demo?

Read my comment above.

...But that then makes it worse since these are only half the 130 watt TDP. Again, where is the much heralded 45nm power savings?

No offense meant by this, but your comment is ridiculous. All of Intel's Core-based dual-cores are at 65W, all the way from the Pentium 2140 to the E6850.

If you really want to draw conclusions go ahead, but I feel you would be wrong.

65W is the base TDP used by Intel for Dual-cores, simple as that.

Now, are you claiming that these chips will completely replace the 65nm chips in Q1

No not at all, just that I don't feel there will be any shortage of 45nm quad-cores in Q1 or when released.

...or are you claiming that Intel will keep the 45nm prices high enough to maintain demand for the older chips?

I think we could possibly see a price cut sometime around launch, but who am I...

Sci.

Intel is putting the binning where it's needed the most. The server Space.

The 3.0ghz, 1600FSB Xeon is only 80W.

The enthusiast chip (which wasn't even supposed to launch this year) is 130W due to lower binning.

Does the enthusiast care about TDP? Hardly; it's one of the last things they consider. The same enthusiast who will be running two 8800GTXs or two 2900HDs hardly cares about TDP.

You like to talk about server space a lot, so let's look at the Xeon 45nm which is 80W at 3.0ghz.
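For anyone who wants to line up the TDP figures thrown around in this thread, here is a small sketch that divides each rated TDP by its core count. Keep in mind that TDP is a design envelope rather than measured draw, that parts get rounded into standard TDP classes, and that dividing by cores ignores everything shared on the package, so this is only a rough comparison. The labels are shorthand for the parts named in the comments above.

```python
# Rated TDP per core for the parts mentioned in this thread.
# Assumption: TDP divided evenly by core count, which ignores shared logic
# and the fact that TDP is a rating class, not measured power.

PARTS = {
    "QX9650 (45nm quad, 3.0GHz)": (130, 4),
    "45nm dual-core": (65, 2),
    "45nm Xeon quad, 3.0GHz": (80, 4),
    "Q6700 (65nm quad)": (95, 4),
}


def tdp_per_core(tdp_watts: float, cores: int) -> float:
    """Return rated TDP in watts divided by the number of cores."""
    return tdp_watts / cores


if __name__ == "__main__":
    for name, (tdp, cores) in PARTS.items():
        print(f"{name}: {tdp_per_core(tdp, cores):.2f} W per core")
```

On these ratings the 130W quad and the 65W dual come out identical per core, while the 80W Xeon comes out well below both, which is the binning point being argued here.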

scientia

"You both pointed out a problem with my original article so I corrected it (which I can assure you I don't do for every poster who claims to make a correction)."